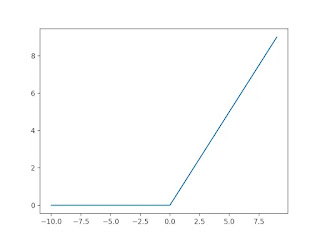

ReLU Activation Function

The Rectified Linear Unit (ReLU activation function) is a popular non-linear function used in artificial neural networks (ANNs) and deep learning models. It is designed to introduce non-linearity to the network, allowing it to learn and represent complex patterns and relationships in the data.

There are

Simplicity: ReLU is a simple and computationally efficient function to implement, as it involves only a simple thresholding operation.

Sparse activation: ReLU promotes sparse activation in the network,

meaning it activates only a subset of neurons while keeping the rest inactive. This sparsitycan reduce overfitting and improve generalization.Avoiding the vanishing gradient problem: ReLU helps mitigate the vanishing gradient

problem during training. Since ReLU does not saturate in the positive region, it does not sufferfrom gradient saturation that affects other activation functions like sigmoid or tanh.Faster convergence: ReLU has been found to accelerate

neural networks' convergence during training compared to other activation functions, particularly when combined with appropriate weight initialization and regularization techniques.

However, ReLU

Dead neurons: ReLU neurons can sometimes become "dead" and remain inactive, especially during training. If the neuron's output

turns negative and remains negative, the gradient flowing through it becomes zero, and the neuron does not learn further. This issue is often mitigated by usingReLU variants, such as Leaky ReLU or Parametric ReLU.Unbounded activation: ReLU does not have an upper bound

on activation values. This can sometimes lead to issues if the network is not properly normalized or regularized, as large activations can cause numerical instability or "exploding gradients" during training.

Given its simplicity and effectiveness, ReLU is widely used as an activation function in many deep-learning

Comments

Post a Comment