Root Mean Square Error

What is RMSE? The error of a model in predicting quantitative data is often measured using the Root Mean Square Error (RMSE).

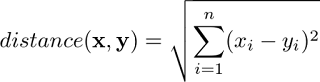
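To make the definition concrete, here is a minimal sketch in plain Python. It assumes the usual definition, $\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (\hat{y}_i - y_i)^2}$, where $\hat{y}_i$ are the predicted values and $y_i$ the observed values. The function name `rmse` and the sample numbers are made up for illustration.

```python
import math

def rmse(y_pred, y_obs):
    """Root mean square error between predicted and observed values."""
    if len(y_pred) != len(y_obs):
        raise ValueError("y_pred and y_obs must have the same length")
    if len(y_pred) == 0:
        raise ValueError("at least one observation is required")
    n = len(y_pred)
    # Average the squared differences, then take the square root.
    mean_squared_error = sum((p - o) ** 2 for p, o in zip(y_pred, y_obs)) / n
    return math.sqrt(mean_squared_error)

# Made-up numbers, purely for illustration.
predicted = [2.0, 4.0, 6.0, 8.0]
observed = [1.0, 5.0, 7.0, 6.0]
example_rmse = rmse(predicted, observed)
print(example_rmse)  # square root of 7/4
```

The squared differences here are 1, 1, 1 and 4, so the mean squared error is 7/4 and the RMSE is its square root.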

Let's try to explore why this measure of error makes sense from a mathematical perspective. Ignoring the division by $n$ under the square root, the first thing we can notice is a resemblance to the formula for the Euclidean distance between two vectors in $\mathbb{R}^n$:

$$d(x, y) = \sqrt{\sum_{i=1}^{n} (x_i - y_i)^2}$$

Heuristically, this suggests that RMSE can be thought of as a kind of distance between the vector of predicted values and the vector of observed values.

But why are we dividing by $n$ under the square root here? If we keep $n$ (the number of observations) fixed, all it does is rescale the Euclidean distance by a factor of $\sqrt{1/n}$. It is not obvious why this is the right thing to do, so let's dig a little deeper.
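One way to get a feel for what the rescaling does is to compare the plain Euclidean distance with RMSE when the number of observations changes. The sketch below uses made-up data and hypothetical helper names (`euclidean_distance`, `rmse`). It repeats the same observations twice, so $n$ doubles while the typical error per point stays the same.

```python
import math

def euclidean_distance(u, v):
    """Euclidean distance between two equal-length vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def rmse(y_pred, y_obs):
    """Euclidean distance rescaled by sqrt(1/n)."""
    return euclidean_distance(y_pred, y_obs) / math.sqrt(len(y_pred))

# Made-up numbers, purely for illustration.
predicted = [2.0, 4.0, 6.0, 8.0]
observed = [1.0, 5.0, 7.0, 6.0]

# Repeat the same data twice: n doubles, the per-point errors do not change.
predicted_twice = predicted * 2
observed_twice = observed * 2

distance_ratio = (euclidean_distance(predicted_twice, observed_twice)
                  / euclidean_distance(predicted, observed))
rmse_ratio = rmse(predicted_twice, observed_twice) / rmse(predicted, observed)

print(distance_ratio)  # close to sqrt(2): the raw distance grows with n
print(rmse_ratio)      # close to 1: RMSE does not depend on n in this case
```

In this toy case the raw distance grows just because there are more points, while RMSE stays put, which is at least suggestive of why the division by $n$ is there.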

Imagine that our observed values are produced by adding random "errors" to each of the predicted values:

$$y_i = \hat{y}_i + \varepsilon_i, \quad i = 1, \dots, n$$

These errors, thought of as random variables, might have a Gaussian distribution with mean $\mu$ and standard deviation $\sigma$, but any other distribution with a finite mean and variance would also work. We want to think of $\hat{y}_i$ as an underlying physical quantity, such as the exact distance from Mars to the Sun at a particular point in time. Our observed quantity $y_i$ would then be the distance from Mars to the Sun as we measure it, with some errors coming from mis-calibration of our telescopes and measurement noise from atmospheric interference.
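The error model described here can be simulated in a few lines. This is only a sketch under stated assumptions: the errors are Gaussian, the values `mu = 0.3` and `sigma = 0.5` are hypothetical, and the "true" quantities are arbitrary numbers in arbitrary units rather than real astronomical data.

```python
import random
import statistics

rng = random.Random(0)  # fixed seed so the sketch is reproducible

mu = 0.3      # assumed calibration bias (hypothetical value)
sigma = 0.5   # assumed measurement noise (hypothetical value)
n = 10_000    # number of observations

# Hypothetical "true" quantities, in arbitrary units.
y_true = [rng.uniform(200.0, 250.0) for _ in range(n)]
# Observed values: true value plus a Gaussian error.
y_obs = [y + rng.gauss(mu, sigma) for y in y_true]

# Recover the errors and summarise them.
errors = [o - t for o, t in zip(y_obs, y_true)]
estimated_bias = statistics.fmean(errors)    # should land near mu
estimated_noise = statistics.stdev(errors)   # should land near sigma
print(estimated_bias, estimated_noise)
```

With this many observations, the sample mean and sample standard deviation of the errors should land close to the assumed bias and noise level.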

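The mean $\mu$ of our error distribution would correspond to a persistent bias coming from mis-calibration, while the standard deviation $\sigma$ would correspond to the amount of measurement noise. Imagine now that we know the mean $\mu$ exactly and would like to estimate the standard deviation $\sigma$. Because the errors are identically distributed, each $E[\varepsilon_i^2]$ equals $\sigma^2 + \mu^2$, and since the bias is known we can subtract it from our observations and take $\mu = 0$. With a little math, we can determine that:

$$\sqrt{E\left[\frac{1}{n}\sum_{i=1}^{n} (\hat{y}_i - y_i)^2\right]} = \sqrt{\frac{1}{n}\sum_{i=1}^{n} E\left[\varepsilon_i^2\right]} = \sqrt{\sigma^2 + \mu^2} = \sigma$$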
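The relationship between the mean squared error and the parameters of the error distribution can also be checked numerically. The sketch below relies on the standard identity $E[\varepsilon^2] = \mathrm{Var}(\varepsilon) + (E[\varepsilon])^2$. It assumes Gaussian errors with hypothetical values `mu = 0.3` and `sigma = 0.5`, and compares the average squared error before and after subtracting the known bias.

```python
import random
import statistics

rng = random.Random(1)  # fixed seed so the sketch is reproducible

mu, sigma = 0.3, 0.5    # hypothetical bias and noise level
n = 200_000

errors = [rng.gauss(mu, sigma) for _ in range(n)]

# Average squared error with the bias left in: near sigma^2 + mu^2.
mean_sq_raw = statistics.fmean(e * e for e in errors)
# Average squared error after subtracting the known bias: near sigma^2.
mean_sq_corrected = statistics.fmean((e - mu) ** 2 for e in errors)

print(mean_sq_raw, sigma ** 2 + mu ** 2)
print(mean_sq_corrected, sigma ** 2)
```

If the bias were left in, the square root of the first quantity would overstate the noise level, which is why the known bias is removed before reading RMSE as an estimate of the standard deviation.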

Notice how familiar the left-hand side looks! If we remove the expectation $E[\dots]$ from inside the square root, it is exactly our RMSE formula from before. By the central limit theorem, the quantity $\sum_i (\hat{y}_i - y_i)^2 / n = \sum_i \varepsilon_i^2 / n$ concentrates around its expectation as $n$ increases. In fact, for independent errors with a finite fourth moment, its variance is exactly $\mathrm{Var}(\varepsilon^2)/n$, so it approaches zero like $1/n$. This tells us that $\sum_i (\hat{y}_i - y_i)^2 / n$ is a good estimator for $E[\sum_i (\hat{y}_i - y_i)^2 / n] = \sigma^2$. It follows that RMSE is a good estimator of the standard deviation $\sigma$ of our error distribution.
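A small simulation can illustrate both claims: RMSE settling near the standard deviation of the errors, and the variance of the mean squared error shrinking roughly like $1/n$. This is a sketch under assumptions not in the text above: the errors are independent zero-mean Gaussians with a hypothetical `sigma = 1.0`, and the sample sizes and number of trials are arbitrary.

```python
import math
import random
import statistics

rng = random.Random(2)  # fixed seed so the sketch is reproducible

sigma = 1.0    # hypothetical noise level; errors have mean zero
trials = 200   # repeated experiments per sample size

def simulated_mse(n):
    """Mean squared error of n simulated zero-mean Gaussian errors."""
    return sum(rng.gauss(0.0, sigma) ** 2 for _ in range(n)) / n

results = {}
for n in (50, 500, 5000):
    mses = [simulated_mse(n) for _ in range(trials)]
    results[n] = {
        # Average RMSE over the repeated experiments.
        "mean_rmse": statistics.fmean(math.sqrt(m) for m in mses),
        # Spread of the mean squared error across experiments.
        "mse_variance": statistics.variance(mses),
    }
    # If the variance shrinks like 1/n, n * variance stays roughly constant.
    print(n, results[n]["mean_rmse"], n * results[n]["mse_variance"])
```

For Gaussian errors, $\mathrm{Var}(\varepsilon^2) = 2\sigma^4$, so the last printed column should hover around 2 here, while the average RMSE should sit close to `sigma` for the larger sample sizes.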
