Activation Functions in Neural Networks

Activation functions are a very important element of neural networks in deep learning. They help determine the output of a deep learning model, its accuracy, and the computational efficiency of training. They also have a major effect on whether a neural network converges and how fast it does so. In some cases, a poorly chosen activation function can even prevent a network from converging. So, let’s understand activation functions, their types, and their significance and limitations in detail.

What is an activation function?

An activation function helps determine the output of a neural network. It is attached to each neuron in the network and determines whether that neuron should be activated or not, based on whether the neuron’s input is relevant for the model’s prediction. Many activation functions also normalize the output of each neuron to a range between 0 and 1 or between -1 and 1. Because neural networks are sometimes trained on millions of data points, the activation function must also be computationally efficient, so that it reduces calculation time and improves performance.
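To make the two output ranges concrete, here is a minimal sketch using only Python's standard library. Sigmoid and tanh are picked here as common examples of functions with these ranges; they are an illustrative choice, not the only option.

```python
import math


def sigmoid(x: float) -> float:
    """Squash a real number into the range 0 to 1."""
    # Branch on the sign so math.exp never receives a large positive argument.
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def tanh(x: float) -> float:
    """Squash a real number into the range -1 to 1."""
    return math.tanh(x)


for x in (-5.0, 0.0, 5.0):
    print(f"x={x:+.1f}  sigmoid={sigmoid(x):.4f}  tanh={tanh(x):+.4f}")
```

Large negative inputs land near the lower end of each range, large positive inputs near the upper end, and an input of 0 maps to the midpoint (0.5 for sigmoid, 0 for tanh).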

Let’s understand how it works

In a neural network, inputs are fed into the neurons of the input layer. Each neuron has weights; multiplying each input by its weight, summing the results, and adding a bias gives the output of the neuron, which is then passed to the next layer, and this process continues. The output can be represented as:

Y = ∑ (weights * input) + bias

Note: The range of Y can be anywhere from -infinity to +infinity. So, to bring the output into our desired prediction or generalized results, we have to pass this value through an activation function. The activation function is a kind of mathematical “gate” between the input feeding the current neuron and its output going to the next layer. It can be as simple as a step function that turns the neuron output on or off, depending on a rule or threshold. The final output is then f(Y), where f is the activation function.
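The computation described above can be sketched for a single neuron as follows. The input values, weights, bias, and the threshold of 0 are made-up numbers for illustration, and the sketch assumes the bias is added once per neuron, after the weighted sum.

```python
def weighted_sum(inputs, weights, bias):
    """Compute Y: multiply each input by its weight, sum, then add the bias."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias


def step(y, threshold=0.0):
    """Step activation: the neuron is on (1) at or above the threshold, else off (0)."""
    return 1 if y >= threshold else 0


# Hypothetical values chosen only for this example.
inputs = [0.5, -1.0, 2.0]
weights = [0.4, 0.3, 0.1]
bias = -0.2

y = weighted_sum(inputs, weights, bias)  # roughly -0.1, below the threshold
output = step(y)                         # so the neuron stays off

print("Y =", y, "-> step(Y) =", output)
```

Here Y is unbounded, while the step function reduces it to one of two values that the next layer receives.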

Neural networks use non-linear activation functions, which help the network learn complex data, compute and learn almost any function representing a question, and give accurate predictions.

Why do we need activation functions?

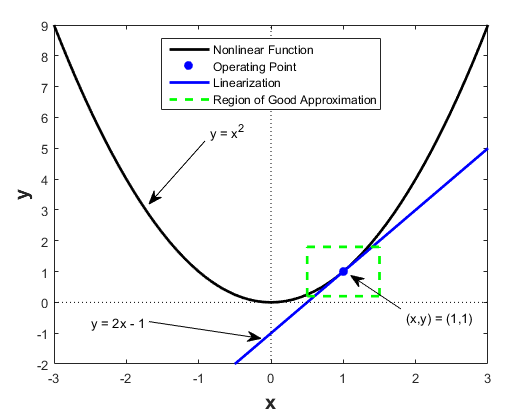

The core idea behind applying an activation function is to bring non-linearity into our deep learning models. Non-linear functions, such as polynomials with a degree greater than one, form a curve rather than a straight line when plotted.
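One way to see why non-linearity matters: without an activation function, stacking layers gains nothing, because two linear layers in a row are equivalent to a single linear layer. The sketch below shows this for one-input, one-output layers. The weights are arbitrary illustrative values, and ReLU is used as the non-linear activation purely as an assumption for the example.

```python
def linear_layer(x, w, b):
    """A one-input, one-output linear layer: w * x + b."""
    return w * x + b


def relu(x):
    """ReLU activation: pass positive values through, clamp negatives to 0."""
    return max(0.0, x)


def two_linear(x):
    """Two linear layers with no activation in between."""
    return linear_layer(linear_layer(x, 2.0, 1.0), -3.0, 0.5)


def collapsed(x):
    """The single linear layer that the two layers above reduce to."""
    return (-3.0 * 2.0) * x + (-3.0 * 1.0 + 0.5)


def with_relu(x):
    """The same two layers, with a ReLU between them."""
    return linear_layer(relu(linear_layer(x, 2.0, 1.0)), -3.0, 0.5)


for x in (-2.0, 0.0, 2.0):
    print(x, two_linear(x), collapsed(x), with_relu(x))
```

The first two output columns always agree, so the extra layer adds no expressive power. With the ReLU in between, the outputs no longer lie on one straight line.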

We need to apply an activation function f(x) to make the network more powerful, giving it the ability to learn data that is more complex and complicated in form and to represent non-linear, complex, arbitrary functional mappings between inputs and outputs. Hence, by using a non-linear activation, we are able to produce non-linear mappings from inputs to outputs. Another important property of an activation function is that it should be differentiable. We need this because backpropagation moves backwards through the network to compute gradients of the error (loss) with respect to the weights, and then optimizes the weights using gradient descent or another optimization technique to reduce the error.
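To illustrate why differentiability matters, here is a minimal sketch of gradient descent on a single neuron. It assumes a sigmoid activation, a squared-error loss, one made-up training example, and a learning rate of 0.1; none of these choices come from the text above.

```python
import math


def sigmoid(x):
    """Sigmoid activation (adequate for the small inputs used here)."""
    return 1.0 / (1.0 + math.exp(-x))


def sigmoid_grad(x):
    """Derivative of the sigmoid: s * (1 - s)."""
    s = sigmoid(x)
    return s * (1.0 - s)


def loss(w, b, x, target):
    """Squared error of a single neuron with one weight and one bias."""
    return 0.5 * (sigmoid(w * x + b) - target) ** 2


def gradients(w, b, x, target):
    """Chain rule: d(loss)/dw and d(loss)/db through the activation."""
    z = w * x + b
    dloss_dz = (sigmoid(z) - target) * sigmoid_grad(z)
    return dloss_dz * x, dloss_dz


# Hypothetical starting point and training example.
w, b = 0.5, 0.0
x, target = 1.0, 1.0
learning_rate = 0.1

loss_before = loss(w, b, x, target)
for _ in range(100):
    grad_w, grad_b = gradients(w, b, x, target)
    w -= learning_rate * grad_w  # gradient descent update
    b -= learning_rate * grad_b
loss_after = loss(w, b, x, target)

print("loss before:", loss_before, "loss after:", loss_after)
```

The update depends on `sigmoid_grad`: if the activation had no usable derivative, there would be no gradient to tell the weights which way to move.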
